In the fast-paced world of technology, new advances arrive daily and push the boundaries of innovation. With them come new challenges and threats. Researchers have now demonstrated what is described as the first worm developed specifically to target artificial intelligence. The attacks shown against ChatGPT and Google Gemini may be just the beginning of a new era of cyber threats aimed at AI systems.

What is ChatGPT and how does it work?

ChatGPT is an AI-powered chatbot developed by OpenAI that has gained widespread popularity for its ability to engage in human-like conversations. Its initial release was built on OpenAI’s GPT-3.5 family of models, and it is designed to understand and generate text based on the context of a conversation. What matters for security is that ChatGPT, like other LLM-based systems, acts on whatever text it is given, so carefully crafted input can steer its output in ways its operators never intended.

Researchers Create Malicious AI ‘Worm’ Aimed at Generative AI Systems

A team of researchers has created a malicious artificial intelligence (AI) ‘worm’ designed to attack generative AI systems. The work raises important questions about how safe and reliable AI technologies are, especially as they are used more and more in areas like healthcare, finance, and the creative arts.

The first virus for artificial intelligence

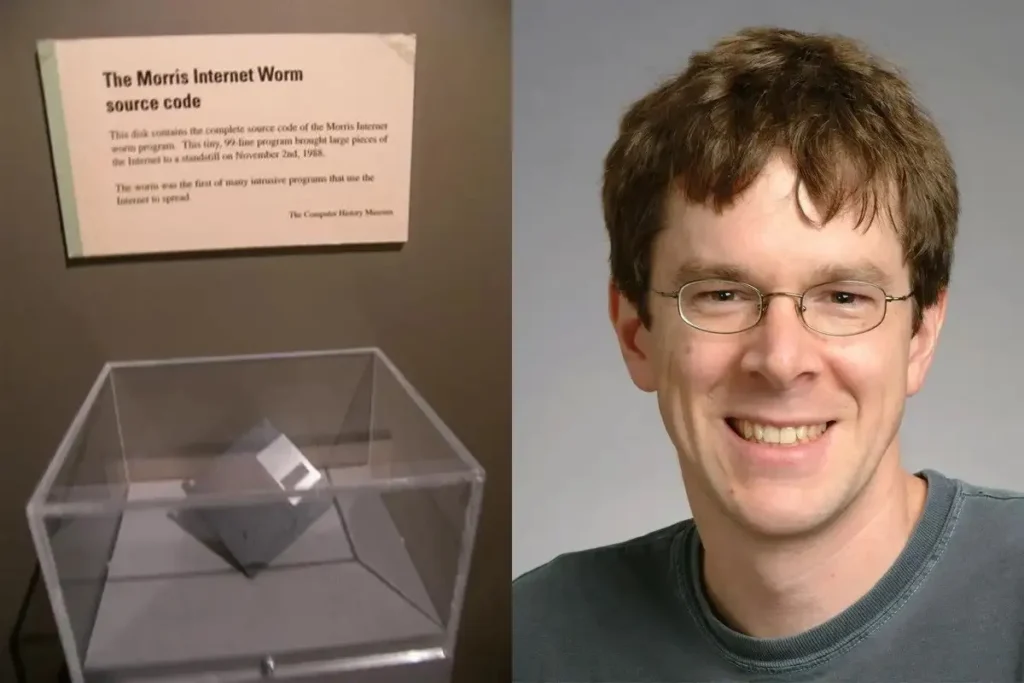

A new cybersecurity threat has emerged with the “Morris II” worm, a sophisticated piece of malware that uses popular AI services to spread, infiltrate systems, and steal sensitive data. Named after the disruptive Morris worm of 1988, this development highlights the urgent need to strengthen AI models against such vulnerabilities.

In a controlled testing environment, Morris II, described as the first worm designed to target AI systems, caused AI email assistants to leak personal information and send spam emails.

Researchers have identified a novel “zero-click” AI worm capable of exploiting ChatGPT, Gemini, and the open-source AI model LLaVA through a malicious self-replicating prompt utilizing text and image inputs.

This worm can propagate its attack across various models by taking advantage of interconnectivity within the AI ecosystem, facilitating phishing attacks, spam email distribution, and the spread of propaganda.

This research seeks to demonstrate that all software, including the large language models within GenAI tools, is vulnerable to computer virus threats.

Morris II, created by researchers from Cornell Tech, the Technion (Israel Institute of Technology), and Intuit, is named after the Morris Worm, one of the earliest self-replicating computer worms, released by Robert Morris in 1988.

The Morris Worm was responsible for crashing approximately 10% of the computers connected to the internet at that time.

A new worm uses a tricky self-copying method

This makes it harder to stop and control

Researchers from Cornell Tech, the Technion (Israel Institute of Technology), and Intuit developed a novel technique known as an “adversarial self-replicating prompt” to engineer a computer worm. The technique involves feeding a specially crafted prompt to a large language model (LLM). The researchers evaluated it against OpenAI’s ChatGPT, Google’s Gemini, and the open-source LLaVA model, a collaborative effort by the University of Wisconsin-Madison, Microsoft Research, and Columbia University.

The adversarial self-replicating prompt is designed to manipulate the LLM into reproducing the prompt in its own output, alongside whatever malicious action the prompt requests. Each time a model processes an infected message, its response carries the same instructions onward to the next recipient, creating a self-propagating cycle of harmful instructions that needs no further input from the attacker.
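Because a self-replicating prompt has to reappear in the model's output in order to spread, one defensive idea is to compare each generated reply against the content that produced it and flag long verbatim overlaps. The following is a minimal sketch of that idea, not the researchers' method. The function names, the 40-character threshold, and the placeholder strings are all assumptions made for illustration.

```python
from difflib import SequenceMatcher


def longest_shared_run(prompt: str, output: str) -> int:
    """Return the length of the longest substring found in both texts."""
    matcher = SequenceMatcher(None, prompt, output, autojunk=False)
    match = matcher.find_longest_match(0, len(prompt), 0, len(output))
    return match.size


def looks_self_replicating(prompt: str, output: str, min_run: int = 40) -> bool:
    """Flag outputs that copy a long verbatim run from the input.

    min_run is an arbitrary illustrative threshold, not a tuned value.
    """
    return longest_shared_run(prompt, output) >= min_run


# Placeholder text only; no real adversarial prompt is shown here.
incoming = (
    "Hi, can we move the meeting? "
    "<placeholder instruction block that asks to be copied into every reply>"
)
benign_reply = "Sure, Thursday at 10 works for me."
copied_reply = (
    "Sure. <placeholder instruction block that asks to be copied into every reply>"
)

print(looks_self_replicating(incoming, benign_reply))
print(looks_self_replicating(incoming, copied_reply))
```

A check like this would only catch verbatim copying. A prompt that asks the model to paraphrase itself would slip past, so it is best read as one layer among several rather than a complete defense.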

The mechanism by which this worm operates is akin to well-known attacks such as SQL injection and buffer overflows, in which attacker-supplied data ends up being treated as instructions. In the same way, the adversarial prompt arrives as ordinary content, such as the body of an email, yet the model acts on it as a command and can carry out harmful actions without direct human intervention.
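To make the SQL injection comparison concrete, here is a small self-contained sketch using Python's built-in `sqlite3` module. The table, the rows, and the input string are invented for illustration. It shows how input spliced into a query is interpreted as part of the command, and how a parameterized query keeps it as plain data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)

# Input that is meant to be a name but contains SQL syntax.
user_input = "nobody' OR '1'='1"

# Unsafe: the input is spliced into the query text, so it is parsed as SQL.
unsafe_rows = conn.execute(
    f"SELECT name FROM users WHERE name = '{user_input}'"
).fetchall()

# Safe: the placeholder keeps the input as a plain value.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (user_input,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The unsafe query returns both rows, while the parameterized one returns none. Prompts sent to an LLM generally lack an equally strict boundary between instructions and data, which is part of why prompt-based attacks are hard to rule out.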

The worm possesses two primary functions:

1. Data theft: The worm can siphon sensitive personal information, such as names, phone numbers, credit card information, and social security numbers, from the email accounts of infected systems.

2. Spam distribution: The worm can create and dispatch spam and other harmful emails using compromised AI-driven email assistants, facilitating its spread to additional systems.
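One way to limit both functions is to screen what an AI email assistant is about to send. The sketch below scans an outgoing draft for strings that look like US social security numbers or payment card numbers. It is a hypothetical filter, not something described by the researchers: the patterns, the function names, and the sample draft (which uses well-known dummy values) are assumptions.

```python
import re

# Illustrative patterns only; real data-loss-prevention rules are broader.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Check a digit string with the Luhn checksum used by card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list:
    """Return (kind, matched_text) pairs for likely SSNs and card numbers."""
    findings = []
    for m in SSN_PATTERN.finditer(text):
        findings.append(("ssn", m.group()))
    for m in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"\D", "", m.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings.append(("card", m.group().strip()))
    return findings


draft = "Name: Jane Doe, SSN 123-45-6789, card 4111 1111 1111 1111."
print(find_sensitive(draft))
```

A deployment could hold any draft with findings for human review instead of sending it automatically. Pattern matching will miss names, free-text details, and reformatted numbers, so this narrows the leak rather than closing it.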

Compromised artificial intelligence databases

The researchers illustrated the text-based method by composing an email that contained an adversarial text prompt. The email “poisoned” the database of an AI email assistant that uses retrieval-augmented generation (RAG), a technique that lets a large language model (LLM) access and use external data sources. Once the email had been received and stored, the RAG component retrieved it as context and forwarded it to the LLM provider. The resulting response compromised the AI service, extracted sensitive information from the emails, and carried the infection to new hosts when the LLM was used to reply to another client’s email.
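The poisoning step is easier to see in a toy pipeline. The sketch below is a deliberately simplified stand-in for a RAG email assistant: retrieval is plain word overlap rather than embeddings, no real LLM is called, and every name and message in it is hypothetical. It shows only that a stored message from an outside sender ends up inside the prompt alongside trusted context.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(store: list, query: str, k: int = 2) -> list:
    """Return the k stored emails sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(store, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(store: list, new_email: str) -> str:
    """Assemble the text that would be sent to the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(store, new_email))
    return f"Context from past emails:\n{context}\n\nReply to:\n{new_email}"


# The third entry stands in for a message written by an outside sender.
store = [
    "Lunch on Friday at noon?",
    "Invoice for the March project is attached.",
    "About the project invoice: [UNTRUSTED TEXT WRITTEN BY AN OUTSIDE SENDER]",
]

prompt = build_prompt(store, "Can you resend the project invoice?")
print(prompt)
```

Nothing in the assembled prompt marks the third entry as less trustworthy than the others. This is the gap a poisoned email exploits: the model sees one block of text and has no reliable way to tell context from commands.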

In a separate experiment involving images, the researchers embedded a self-replicating prompt within an image file, which prompted the email assistant to disseminate the message to additional email addresses. This image functioned as both the content—potentially containing spam, scams, propaganda, disinformation, or abusive material—and as the activation mechanism that facilitated the spread of the malicious worm.

The researchers have indicated that this development signifies a novel category of cybersecurity threat, particularly as AI systems continue to evolve and become more interconnected. The laboratory-engineered malware exemplifies the ongoing vulnerabilities inherent in LLM-based chatbot services, highlighting their susceptibility to exploitation for harmful cyberattacks. OpenAI has recognized this vulnerability and is actively engaged in efforts to enhance the resilience of its systems against such attacks.

Final Note: The creation of harmful AI ‘worms’ that attack generative AI shows that we need to think about ethics and security as we develop new tech. As scientists explore AI further, it’s crucial for everyone to stay alert to cybersecurity risks. By managing carefully how digital and AI tools are deployed and connected, we can create a safer future for everyone.

Additional Information:

What Is an AI Worm?

An AI worm is a type of malware that leverages artificial intelligence to enhance its propagation and effectiveness. Capable of self-replicating, it can quickly spread across networks and devices, utilizing AI techniques to evade detection and adapt to security measures.

AI Worms Explained

AI worms are a new type of malware that uses artificial intelligence to spread and steal information. Unlike traditional malware, an AI worm doesn’t rely on code vulnerabilities. Instead, it manipulates AI models into generating seemingly harmless text or images that carry malicious instructions.

The recently developed “Morris II” AI worm works by using adversarial self-replicating prompts. These prompts trick AI systems into generating responses that contain the same malicious instructions. When an AI assistant processes an infected message, for example while drafting a reply to an email, the infection is passed on to the next system.

Key capabilities of AI worms like Morris II include:

- Data Exfiltration: AI worms can extract sensitive data from infected systems, including names, phone numbers, credit card details, and social security numbers.

- Spam Propagation: An AI worm can generate and send spam or malicious emails through compromised AI-powered email assistants, helping spread the infection.

While Morris II currently exists only as a research project in controlled environments, it demonstrates potential security risks as AI systems become more interconnected. Researchers warn that developers and companies need to address these vulnerabilities, especially as AI assistants gain more autonomy in performing tasks on users’ behalf.

Characteristics of AI Worms

AI worms are, well, intelligent. They possess abilities to learn from interactions and dynamically adjust strategies to dodge security measures.

Adaptability

AI worms adapt to different environments and security measures. They analyze the security protocols of the systems they encounter and modify their behavior to avoid detection. For instance, if an AI worm encounters a firewall, it may change its communication patterns to mimic legitimate traffic, thus slipping past the firewall undetected.

Learning

AI worms utilize machine learning algorithms to improve their effectiveness. They collect data from their environment and learn which strategies work best for spreading and avoiding detection. For example, an AI worm might analyze failed attempts to penetrate a network and adjust its methods based on what it learns, increasing its success rate over time.

Propagation

AI worms use sophisticated algorithms to identify the most efficient ways to spread. They analyze network structures and pinpoint vulnerabilities to exploit. This might involve using social engineering tactics to trick users into downloading malicious attachments or exploiting known software vulnerabilities to gain access to new systems.

Advanced Evasion

AI worms continuously change their signatures and behaviors to evade detection. Traditional security systems rely on recognizing known malware signatures, but AI worms can generate new signatures on the fly, making them difficult to detect. They might also mimic the behavior of legitimate software processes to blend in with normal network traffic.
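The weakness of signature matching can be shown in a few lines. This sketch treats a signature as a SHA-256 hash of the payload, which is a simplification of how real antivirus signatures work. The payload bytes and function names are placeholders chosen for illustration.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Use the SHA-256 digest of the payload as its signature."""
    return hashlib.sha256(payload).hexdigest()


# A blocklist containing the signature of one known sample.
known_bad = {signature(b"sample-payload-v1")}


def is_flagged(payload: bytes) -> bool:
    """Return True when the payload's signature is on the blocklist."""
    return signature(payload) in known_bad


original = b"sample-payload-v1"
variant = b"sample-payload-v2"  # differs from the original by one byte

print(is_flagged(original))
print(is_flagged(variant))
```

The original is caught and the one-byte variant is not. Any malware that rewrites itself between infections defeats exact-match signatures in this way, which is why defenders pair them with behavior-based detection.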

Targeted Attacks

AI worms can be programmed to target specific systems or organizations. They gather intelligence on their targets, such as identifying critical infrastructure or high-value data. A targeted approach allows them to cause maximum damage or exfiltrate sensitive information with higher precision.

Automated Exploitation

AI worms automate the process of finding and exploiting vulnerabilities. They scan networks for weak points and deploy exploits faster than human hackers can. This automation allows them to scale their attacks and compromise a large number of systems in a short period.

By leveraging these intrinsic characteristics, AI worms pose a significant threat to cybersecurity. Understanding these traits enables us to develop more effective defenses and mitigate the risks associated with such advanced malware.

Traditional Worms Vs. AI Worms

Traditional worms have been around for a long while. As security teams know, they follow predefined rules and patterns, which makes them less flexible and easier to detect once their signature is known. AI worms, however, stand out from traditional worms primarily because they use machine learning algorithms to learn from their environment and adapt their behavior in real time.

When AI worms encounter new security measures, they adjust their strategies to overcome the obstacles. They also excel in evasion techniques. They continuously change their signatures and behaviors to evade detection. By mimicking legitimate network traffic or software processes, they blend in seamlessly and avoid triggering security alerts. Traditional worms, in contrast, usually have static signatures and behaviors, making them more susceptible to detection by signature-based antivirus programs.

In terms of propagation, AI worms use sophisticated algorithms to identify and exploit the most efficient paths. They employ advanced techniques such as social engineering and network vulnerability scanning to spread quickly and effectively. Traditional worms often rely on simpler methods, such as exploiting well-known vulnerabilities or using predictable spreading mechanisms.

AI worms also exhibit a high degree of targeting precision. They gather intelligence on their targets, enabling them to launch precise attacks on specific systems or organizations. This targeted approach maximizes their impact and effectiveness. Traditional worms generally spread indiscriminately, affecting any vulnerable system they encounter, which can make them easier to detect and contain.

In addition, AI worms automate the process of finding and exploiting vulnerabilities, allowing them to scale their attacks quickly and efficiently. They can multitask and perform complex operations simultaneously. Traditional worms tend to follow a linear, step-by-step approach to propagation and exploitation, limiting their ability to scale and adapt quickly.

Potential Threats

With the capacity to disrupt critical infrastructure, AI worms pose an array of threats with far-reaching implications for cybersecurity and beyond. They can target essential services such as power grids, water treatment facilities, and healthcare systems. A successful attack on core infrastructure could endanger lives and cause significant economic damage.

By infiltrating banking networks, AI worms can execute fraudulent transactions, steal sensitive financial data, and even manipulate stock markets. The financial losses from breaches of this nature can destabilize economies.

In terms of corporate espionage, AI worms can infiltrate corporate networks to steal intellectual property, trade secrets, and confidential business strategies. Successful data breaches can give competitors unfair advantages and result in financial losses for the affected organizations.

Similarly, nation-states could deploy AI worms to conduct espionage, steal classified information, or disable defense systems. Such attacks could compromise a country’s defense capabilities and give adversaries critical intelligence, potentially altering the balance of power on a global scale.

The threat extends to personal privacy, as well. AI worms can harvest vast amounts of personal data, including emails, photos, and sensitive documents stored on individual devices. The misuse of this data can lead to identity theft, blackmail, and other malicious activities, causing significant distress and harm to individuals.

In the context of supply chains, AI worms can infiltrate a single supplier’s network and propagate through interconnected systems, leading to production delays, compromised products, and significant financial losses for multiple organizations. The interconnected nature of modern supply chains means that a breach in one part can cascade across the network.
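The cascade can be pictured as reachability in a graph. The sketch below models a made-up supply chain as a dictionary of one-way connections and uses a breadth-first search to list every organization that a breach at one supplier could eventually reach. The node names and links are invented, and real propagation would depend on far more than connectivity.

```python
from collections import deque


def reachable(links: dict, start: str) -> set:
    """Return every node reachable from start by following the links."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in links.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical supply chain: each key connects to the systems it feeds.
links = {
    "supplier_a": ["manufacturer"],
    "supplier_b": ["manufacturer"],
    "manufacturer": ["distributor"],
    "distributor": ["retailer_1", "retailer_2"],
}

exposed = reachable(links, "supplier_a")
print(sorted(exposed))
```

In this example a breach at `supplier_a` can reach the manufacturer, the distributor, and both retailers, while `supplier_b` stays outside the blast radius because nothing downstream links back to it.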

AI worms can also be weaponized for political purposes. Hacktivist groups or politically motivated attackers might deploy them to disrupt elections, manipulate public opinion, or sabotage government operations.

Lastly, we can’t dismiss the psychological impact of AI worms. Nearly 2 in 5 cloud security professionals (38%) consider AI-powered attacks a top concern, according to The State of Cloud-Native Security Report 2024. But when the same group was asked about AI-powered attacks compromising sensitive data, that number shot up to 89%, more than doubling. The uncertainty surrounding the capabilities of AI-powered attacks gives many pause.

Source: Palo Alto Networks, LinkedIn, DH-Image
